How Many Watts Does a Projector Use? The Complete Guide

If you're thinking about creating a true big-screen experience at home, one of the first practical questions you’ll ask is, "How much electricity does a projector actually use?" It's a valid concern. For years, projectors had a reputation for being hot, power-hungry devices, and it’s easy to wonder if that movie night will send your electricity bill soaring.

The good news is that modern projectors are a world away from those old models. But finding a single "wattage" number can be frustrating because the answer truly depends on the type of projector.

This guide will give you a clear, straightforward answer. We'll break down the wattage you can expect from different models, explain what factors control that power draw, and finally settle the big debate: does a projector use more electricity than a big-screen TV?

The Short Answer: Projector Wattage at a Glance

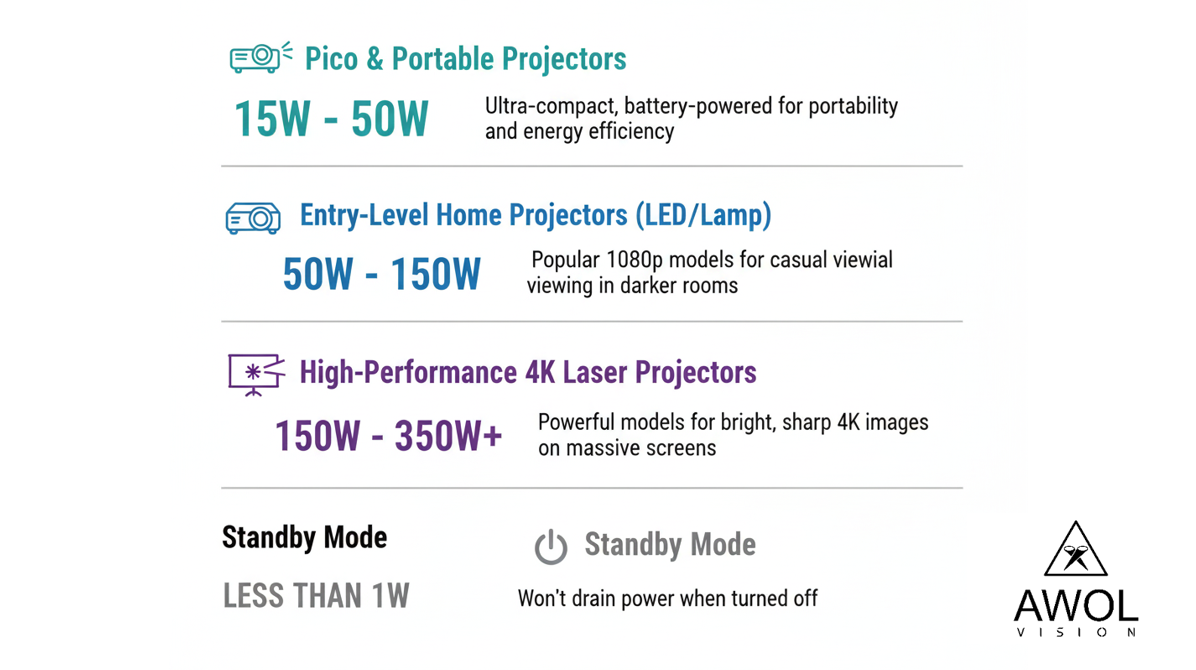

Let's get straight to the point. While every model is different, projectors generally fall into three power consumption categories, and nearly all of them draw very little in standby.

- Pico & Portable Projectors: 15W - 50W. These ultra-compact, battery-powered devices are designed for portability and prioritize energy efficiency over maximum brightness.

- Entry-Level Home Projectors (LED/Lamp): 50W - 150W. This category covers many popular 1080p LED projectors designed for casual viewing in darker rooms.

- High-Performance 4K Laser Projectors: 150W - 350W+. These are the powerful models designed to replace your primary television. Their higher wattage is necessary to produce an incredibly bright, sharp, and vibrant 4K image on a massive screen (100 inches or more), even in a well-lit room.

- Standby Mode: Nearly all modern projectors consume less than 1W in standby mode, so they draw almost no power when switched off but still plugged in.

Why Isn't There One Simple Answer? 4 Factors That Control Power Use

You can see the range is quite wide. That's because a projector's power consumption isn't a random number; it's a direct result of its technology and performance capabilities. Here’s what makes the biggest difference.

1. Light Source Technology: The Biggest Power Factor

The single most significant factor in a projector's energy use is the technology that creates the light.

- Traditional Lamps (UHP): For a long time, most projectors used ultra-high-performance (UHP) lamps. These are powerful, but they generate a lot of heat and consume a considerable amount of electricity—often 250W to 350W or more. They are the primary reason projectors got their "energy hog" reputation.

- LED: LED projectors are far more efficient. They use less energy to produce the same level of brightness and generate much less heat.

- Laser: Sitting at the pinnacle of modern projector technology, laser light sources offer the best of both worlds. They are incredibly energy-efficient while also being capable of producing extreme brightness and vibrant colors. This is the technology used in premium, TV-replacement projectors.

2. Brightness (Lumens): The Cost of a Vibrant Picture

Brightness, measured in lumens, is critical for image quality, especially if you plan to watch with the lights on. It’s a simple trade-off: the brighter the image, the more power is required. A 3,500-lumen projector needs more energy to light up a 120-inch screen than a 500-lumen portable model does for a 50-inch image. This isn't a flaw; it's the necessary power to deliver a stunning, cinematic picture that doesn't wash out.

3. Resolution: The Demand for 4K Detail

Rendering a crisp, detailed image requires processing power. A 4K projector has to manage over 8 million pixels, four times as many as a 1080p projector. This extra work from the internal components requires more electricity to deliver the ultra-sharp picture you expect.
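If you want to check the "four times" figure yourself, the short Python sketch below multiplies out the pixel grids. It assumes "4K" means the consumer UHD format (3840 × 2160) and "1080p" means 1920 × 1080; the names `RESOLUTIONS` and `pixel_count` are just illustrative.

```python
# Pixel counts for the two resolutions discussed above.
# Assumption: "4K" = consumer UHD (3840 x 2160), "1080p" = 1920 x 1080.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Return the total number of pixels in a width x height image."""
    return width * height


full_hd = pixel_count(*RESOLUTIONS["1080p"])
uhd = pixel_count(*RESOLUTIONS["4K UHD"])

print(f"1080p:  {full_hd:,} pixels")    # 1080p:  2,073,600 pixels
print(f"4K UHD: {uhd:,} pixels")        # 4K UHD: 8,294,400 pixels
print(f"Ratio:  {uhd / full_hd:.0f}x")  # Ratio:  4x
```

Doubling both the width and the height is what quadruples the pixel count.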

4. Operating Mode: Finding a Balance with Eco-Mode

Most projectors offer different power modes. The standard or "bright" mode will run at the device's listed wattage to produce maximum brightness. However, nearly all models also include an "Eco Mode." This setting reduces the light output slightly to lower power consumption, extend the light source's life, and often quiet the cooling fans.

Do Projectors Use More Electricity Than a TV?

This is the comparison most people are really thinking about. It's a common myth that a big-screen projector is always less efficient than a TV. The truth is, it depends entirely on how big you want to go.

Let's be perfectly clear: if you are comparing a 55-inch TV to a projector creating a 55-inch image, the TV will almost certainly use less power.

But nobody buys a high-performance projector for a 55-inch screen. You buy one for a massive, immersive, 100-inch, 120-inch, or even 150-inch cinematic experience. And when you start comparing that to a television of a similar (though often unobtainable) size, the efficiency argument flips.

The Best Way to Compare: Introducing "Watts-Per-Inch"

The most logical way to compare two completely different displays is to see how efficiently they create their image. We can do this by calculating the watts used per diagonal inch of screen.

The formula is simple:

Total Wattage / Diagonal Screen Size = Watts-Per-Inch

Let's look at a real-world example in the projector vs. TV debate:

- Scenario 1: A Premium 85-Inch OLED TV. A popular high-end model might consume around 300W during typical 4K HDR playback.

- Calculation: 300 Watts / 85 Inches = 3.53 Watts-Per-Inch

- Scenario 2: A Modern 4K Laser Projector. A high-performance model like the AWOL Vision LTV-3500 Pro uses about 320W to create a brilliant 120-inch image.

- Calculation: 320 Watts / 120 Inches = 2.67 Watts-Per-Inch

The result is clear. To create a truly massive and immersive picture, the high-performance laser projector is significantly more energy-efficient on a per-inch basis, using about 24% less power per diagonal inch than the TV while delivering a much bigger screen.
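Here is a minimal Python sketch of the watts-per-inch formula, applied to the two scenarios above. The wattage and screen-size figures come straight from those examples; the function name `watts_per_inch` is just a label chosen for illustration.

```python
def watts_per_inch(watts: float, diagonal_inches: float) -> float:
    """Total wattage divided by diagonal screen size (W per diagonal inch)."""
    if diagonal_inches <= 0:
        raise ValueError("diagonal size must be positive")
    return watts / diagonal_inches


# (watts, diagonal inches) taken from the two scenarios above
displays = {
    "85-inch OLED TV": (300, 85),
    "120-inch 4K laser projector": (320, 120),
}

for name, (watts, size) in displays.items():
    print(f"{name}: {watts_per_inch(watts, size):.2f} W/inch")
# 85-inch OLED TV: 3.53 W/inch
# 120-inch 4K laser projector: 2.67 W/inch
```

You can swap in the wattage and screen size of any TV or projector you're considering. Use the manufacturer's typical-use power figure for both devices so the comparison stays fair.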

How to Calculate the Real-World Cost of Running Your Projector

Wondering what these wattage numbers mean for your wallet? You can calculate the cost in two simple steps.

Step 1: Find Your Projector's Wattage

You can usually find the power consumption (listed in Watts or "W") in one of these places:

- Printed on the power brick/adapter.

- On a sticker on the bottom of the projector itself.

- In the "Specifications" section of the user manual or on the manufacturer's official website.

Step 2: Use the Simple Cost Formula

The calculation to find the cost is:

(Wattage / 1000) x Hours of Use x Cost per kWh = Total Cost

Your "cost per kWh" is on your monthly utility bill. The U.S. average is around $0.17 per kWh.

Let's use our 320W high-performance projector as an example, assuming you watch it for 4 hours a day and pay the national average electricity rate:

- (320W / 1000) = 0.32 kW

- 0.32 kW x 4 hours = 1.28 kWh per day

- 1.28 kWh x $0.17 = $0.22 per day

Running this powerful 4K projector for four hours a day would cost you less than a quarter per day, or about $6.50 over a 30-day month.
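The same cost formula fits in a few lines of Python if you'd like to plug in your own numbers. This sketch reuses the example values above (320W, 4 hours a day, $0.17 per kWh). It assumes a 30-day month, and the function name `running_cost` is only illustrative.

```python
def running_cost(watts: float, hours: float, price_per_kwh: float) -> float:
    """(Wattage / 1000) x hours of use x cost per kWh."""
    kwh = (watts / 1000) * hours  # energy used, in kilowatt-hours
    return kwh * price_per_kwh


# 320 W projector, 4 hours a day, $0.17 per kWh (the example above)
daily = running_cost(320, 4, 0.17)
monthly = daily * 30  # assumes a 30-day month

print(f"Per day:   ${daily:.2f}")    # Per day:   $0.22
print(f"Per month: ${monthly:.2f}")  # Per month: $6.53
```

Replace `0.17` with the rate printed on your own utility bill for a more accurate estimate.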

Your Projector Power Questions, Answered (FAQ)

Will a projector significantly increase my electricity bill?

No. As shown above, even a high-performance 4K laser projector costs only a few dollars per month for daily use. It is comparable to, and often more efficient than, a large-screen TV, and is not considered a high-draw appliance like an air conditioner or electric heater.

Is it practical to use a 4K laser projector as an everyday TV?

Absolutely. Modern laser projectors are designed for this exact purpose. They are bright enough for rooms with some daylight, have a rated light source life of 25,000+ hours (more than 17 years at 4 hours a day), and some viewers find reflected light more comfortable on the eyes than a direct-view screen.
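To see how a 25,000-hour rating translates into years, divide it by your daily viewing hours times 365. The Python sketch below does this for a few usage levels. It assumes the same number of hours every day, and `years_of_use` is a hypothetical helper name.

```python
def years_of_use(rated_hours: float, hours_per_day: float) -> float:
    """Years until the rated light-source life is reached at steady daily use."""
    return rated_hours / (hours_per_day * 365)


for daily_hours in (4, 6, 8):
    print(f"{daily_hours} h/day: {years_of_use(25_000, daily_hours):.1f} years")
# 4 h/day: 17.1 years
# 6 h/day: 11.4 years
# 8 h/day: 8.6 years
```

Heavier viewers will reach the rating sooner, so it's worth running the numbers for your own habits.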

How much power do I need to run a projector outdoors?

This depends on your projector. A small portable projector (15W-50W) can run for hours on a small portable power station. A high-performance home projector (150W-350W) needs a larger solar generator or power station for an outdoor home theater. For example, a two-hour movie on a 320W projector uses about 640Wh before conversion losses, so plan on roughly 750Wh or more of capacity.
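A rough way to size a power station is to divide the energy the movie needs by the station's conversion efficiency. The Python sketch below assumes an 85% efficiency, which is an illustrative guess rather than a spec, so check your own power station's documentation for its real figure. Both function names are hypothetical.

```python
# ASSUMPTION: 85% inverter/conversion efficiency, for illustration only.
ASSUMED_EFFICIENCY = 0.85


def capacity_needed_wh(load_watts: float, movie_hours: float,
                       efficiency: float = ASSUMED_EFFICIENCY) -> float:
    """Battery capacity (Wh) needed to run a load for a given time."""
    return load_watts * movie_hours / efficiency


def runtime_hours(capacity_wh: float, load_watts: float,
                  efficiency: float = ASSUMED_EFFICIENCY) -> float:
    """Estimated hours a power station of a given capacity can run a load."""
    return capacity_wh * efficiency / load_watts


for watts in (50, 150, 320):
    print(f"{watts} W projector, 2-hour movie: ~{capacity_needed_wh(watts, 2):.0f} Wh")
# 50 W projector, 2-hour movie: ~118 Wh
# 150 W projector, 2-hour movie: ~353 Wh
# 320 W projector, 2-hour movie: ~753 Wh
```

Sound systems and any other gear plugged into the same station will need extra capacity on top of these estimates.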

Which uses less electricity: a bright 4K projector or a large OLED TV?

For screen sizes over 85 inches, a bright 4K laser projector often uses less electricity per inch of screen than a comparable large OLED TV. While the projector's total wattage may be similar, it produces a much larger picture, making it more efficient at delivering a cinematic experience.
